Monte Carlo Methods

Random Variable Generation

Random Variables

Random Variables

Random Number Generator

Random Variable Generations

Transformation Methods

Central Limit Theorem

Random Process

A random process is act of observing an outcome of an event that is unpredictable.

Examples:

Flipping a coin

Rolling a die

Random Variable

A random variable connects the outcomes observed from a random process to a probability space.

Flipping a Coin

| Outcome | Head | Tails |

| Probability | 0.5 | 0.5 |

Rolling a Die

| Outcome | 1 | 2 | 3 | 4 | 5 | 6 |

| Probability | 1/6 | 1/6 | 1/6 | 1/6 | 1/6 | 1/6 |

Discrete Random Variables

A random variable is considered to be discrete if the outcome are only whole numbers (integers).

PMF

The probability mass function of discrete variable can be represented by a formula, table, or a graph. The Probability of a random variable Y can be expressed as \(P(Y=y)\) for all values of \(y\).

Rolling a Die

CDF

The cumulative distribution function provides the \(P(Y\leq y)\) for a random variable \(Y\).

Expected Value

The expected value is the value we expect when we randomly sample from population that follows a specific distribution. The expected value of Y is

\[ E(Y)=\sum_y yP(y) \]

Variance

The variance is the expected squared difference between the random variable and expected value.

\[ Var(Y)=\sum_y\{y-E(Y)\}^2P(y) \]

\[ Var(Y) = E(X^2) - E(X)^2 \]

Known Distributions

| Distribution | Parameter(s) | PMF \(P(Y=y)\) |

|---|---|---|

| Bernoulli | \(p\) | \(p\) |

| Binomial | \(n\) and \(p\) | \((^n_y)p^y(1-p)^{n-p}\) |

| Geometric | \(p\) | \((1-p)^{y-1}p\) |

| Negative Binomial | \(r\) and \(p\) | \((^{y-1}_{r-1})p^{r-1}(1-p)^{y-r}\) |

| Hypergeometric | \(N\), \(n\), and \(r\) | \(\frac{(^r_y)(^{N-r}_{n-y})}{(^N_n)}\) |

| Poisson | \(\lambda\) | \(\frac{\lambda^y}{y!} e^{-\lambda}\) |

Binomial Distribution

An experiment is said to follow a binomial distribution if

- Fixed \(n\)

- Each trial has 2 outcomes

- The probability of success is a constant \(p\)

- The trials are independent of each

\(P(X=x)=(^n_x)p^x(1-p)^{n-x}\)

\(X\) can be any value between 0 to n

\(X \sim Bin(n,p)\)

Bernoulli Distribution (n = 1, p = 0.1; Biased Coin Flip)

Code

p <- 0.1

x <- rbinom(50000, 1, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:1 |> pbinom(1, p) |> tibble(x = 0:1, y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (n = 30, p = 0.1)

Code

p <- 0.1

x <- rbinom(50000, 30, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

xlim(c(0,30)) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:30 |> pbinom(30, p) |> tibble(x = 0:30, y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (n = 30, p = 0.5)

Code

p <- 0.5

x <- rbinom(50000, 30, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

xlim(c(0,30)) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:30 |> pbinom(30, p) |> tibble(x = 0:30, y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (n = 30, p = 0.85)

Code

p <- 0.85

x <- rbinom(50000, 30, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

xlim(c(0,30)) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:30 |> pbinom(30, p) |> tibble(x = 0:30, y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Expectations

\[ E(X) = np \]

\[ Var(X) = np(1-p) \]

Poisson Distribution

The poisson distribution describes an experiment that measures that occurrence of an event at specific point and/or time period.

\(P(X=x)=\frac{\lambda^x}{x!}e^{-\lambda}\)

\(X\) can take any value from 0 to \(\infty\)

\(X \sim Pois(\lambda)\)

Distribution (\(\lambda\) = 3.5)

Code

p <- 3.5

x <- rpois(50000, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:max(x) |> ppois(p) |> tibble(x = 0:max(x), y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (\(\lambda\) = 34.5)

Code

p <- 34.5

x <- rpois(50000, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:max(x) |> ppois(p) |> tibble(x = 0:max(x), y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Expectations

\[ E(X) = \lambda \]

\[ Var(X) = \lambda \]

Negative Binomial

The negative binomial distribution is a discrete probability distribution that models the number of failures required to achieve a specified number of successes in a sequence of independent and identically distributed Bernoulli trials.

\[ P(X = k) = \binom{k + r - 1}{r - 1} p^r (1 - p)^k \]

\(X\) can take the values from 0 to \(\infty\)

\(X\sim NB(p, r)\)

Expectations

\[ E(X) = \frac{r (1 - p)}{p} \]

\[ \text{Var}(X) = \frac{r (1 - p)}{p^2} \]

Distribution (r = 11, p = 0.1)

Code

p <- 0.1

x <- rnbinom(50000,11, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:max(x) |> pnbinom(11, p) |> tibble(x = 0:max(x), y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (r = 11, p = 0.45)

Code

p <- 0.45

x <- rnbinom(50000, 11, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:max(x) |> pnbinom(11, p) |> tibble(x = 0:max(x), y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (r = 11, p = 0.63)

Code

p <- 0.63

x <- rnbinom(50000, 11, p)

p1 <- x |> tibble() |>

ggplot(aes(x)) +

geom_bar(aes(y = (..count..)/sum(..count..))) +

ylab("Probability") +

ggtitle("PMF") +

theme_bw()

p2 <- 0:max(x) |> pnbinom(11, p) |> tibble(x = 0:max(x), y = _) |>

ggplot(aes(x,y)) +

geom_step() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Continuous Random Variables

A random variable \(X\) is considered continuous if the \(P(X=x)\) does not exist.

CDF

The cumulative distribution function of \(X\) provides the \(P(X\leq x)\), denoted by \(F(x)\), for the domain of \(X\).

Properties of the CDF of \(X\):

- \(F(-\infty)\equiv \lim_{y\rightarrow -\infty}F(y)=0\)

- \(F(\infty)\equiv \lim_{y\rightarrow \infty}F(y)=1\)

- \(F(x)\) is a nondecreaseing function

The probability density function of the random variable \(X\) is given by

\[ f(x)=\frac{dF(x)}{d(x)}=F^\prime(x) \]

wherever the derivative exists.

Properties of pdfs:

- \(f(x)\geq 0\)

- \(\int^\infty_{-\infty}f(x)dx=1\)

- \(P(a\leq X\leq b) = P(a<X<b)=\int^b_af(x)dx\)

Expected Value

The expected value for a continuous distribution is defined as

\[ E(X)=\int x f(x)dx \]

The expectation of a function \(g(X)\) is defined as

\[ E\{g(X)\}=\int g(x)f(x)dx \]

Variance

The variance of continuous variable is defined as

\[ Var(X) = E[\{X-E(X)\}^2] = \int \{X-E(X)\}^2 f(x)dx \]

Uniform Distribution

A random variable is said to follow uniform distribution if the density function is constant between two parameters.

\[ f(x) = \left\{\begin{array}{cc} \frac{1}{b-a} & a \leq x \leq b\\ 0 & \mathrm{elsewhere} \end{array}\right. \]

\(X\) can take any value between \(a\) and \(b\)

\(X \sim U(a,b)\)

Distribution (a = 4, b = 25)

Code

a <- 4

b <- 25

x <- seq(a, b, length.out = 1000)

p1 <- dunif(x, a, b) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- punif(x, a, b) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (a = 0, b = 1)

Code

a <- 0

b <- 1

x <- seq(a, b, length.out = 1000)

p1 <- dunif(x, a, b) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- punif(x, a, b) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Expectations

\[ E(X) = \frac{a+b}{2} \]

\[ Var(X) = \frac{1}{12}(b-a)^2 \]

Normal Distribution

A random variable is said to follow a normal distribution if the the frequency of occurrence follow a Gaussian function.

\[ f(x) = \frac{1}{\sqrt{2\pi\sigma^2}}\exp\left\{-\frac{(x-\mu)^2}{2\sigma^2}\right\} \]

\(X\) can take any value between \(-\infty\) and \(\infty\)

\(X\sim N(\mu, \sigma^2)\)

Distribution (\(\mu\) = 34, \(\sigma^2\) = 5)

Code

a <- 25

b <- 45

x <- seq(a, b, length.out = 1000)

p1 <- dnorm(x, 34, sqrt(5)) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- pnorm(x, 34, sqrt(5)) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (\(\mu\) = -8, \(\sigma^2\) = 10)

Code

a <- -20

b <- 4

x <- seq(a, b, length.out = 1000)

p1 <- dnorm(x, -8, sqrt(10)) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- pnorm(x, -8, sqrt(10)) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Expectations

\[ E(X) = \mu \]

\[ Var(X) = \sigma^2 \]

Gamma Distribution

A gamma random variable is characterized by the gamma distribution, used to model waiting times or the time until an event occurs a certain number of times.

\[ f(x; \alpha, \beta) = \frac{x^{\alpha - 1} e^{-x/\beta}}{\beta^\alpha \Gamma(\alpha)} \]

\[ \Gamma(\alpha) = \int_0^\infty t^{\alpha - 1} e^{-t} \, dt \]

\(X\) can take any value between 0 and \(\infty\)

\(X\sim Gamma(\alpha,\beta)\)

Expectations

\[ E(X) = \alpha \beta \]

\[ \text{Var}(X) = \alpha \beta^2 \]

Distribution (\(\alpha\) = 1.5, \(\beta\) = 2.6)

Code

y <- rgamma(1000, 1.5, 2.6)

a <- 0

b <- 10

x <- seq(a, b, length.out = 1000)

p1 <- dgamma(x, 1.5, 2.6) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- pgamma(x, 1.5, 2.6) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (\(\alpha\) = 3.5, \(\beta\) = 1.6)

Code

y <- rgamma(1000, 3.5, 1.6)

a <- 0

b <- 10

x <- seq(a, b, length.out = 1000)

p1 <- dgamma(x, 3.5, 1.6) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- pgamma(x, 3.5, 1.6) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (\(\alpha\) = 5.2, \(\beta\) = 1.6)

Code

y <- rgamma(1000, 5.2, 1.6)

a <- 0

b <- 10

x <- seq(a, b, length.out = 1000)

p1 <- dgamma(x, 5.2, 1.6) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- pgamma(x, 5.2, 1.6) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Beta Distribution

The beta distribution is often used to model random variables that represent proportions or probabilities.

\[ f(x; \alpha, \beta) = \frac{x^{\alpha - 1} (1 - x)^{\beta - 1}}{B(\alpha, \beta)} \]

\[ B(\alpha, \beta) = \int_0^1 t^{\alpha - 1} (1 - t)^{\beta - 1} \, dt \]

\(X\) can take a value between 0 and 1

\(X\sim Beta(\alpha,\beta)\)

Expectations

\[ E(X) = \frac{\alpha}{\alpha + \beta} \]

\[ \text{Var}(X) = \frac{\alpha \beta}{(\alpha + \beta)^2 (\alpha + \beta + 1)} \]

Distribution (\(\alpha\) = 5.2, \(\beta\) = 1.6)

Code

y <- rbeta(1000, 5.2, 1.6)

a <- 0

b <- 1

x <- seq(a, b, length.out = 1000)

p1 <- dbeta(x, 5.2, 1.6) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- pbeta(x, 5.2, 1.6) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (\(\alpha\) = 1.3, \(\beta\) = 1.6)

Code

y <- rbeta(1000, 1.3, 1.6)

a <- 0

b <- 1

x <- seq(a, b, length.out = 1000)

p1 <- dbeta(x, 1.3, 1.6) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- pbeta(x, 1.3, 1.6) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distribution (\(\alpha\) = .72, \(\beta\) = .76)

Code

a <- .72

b <- .76

x <- seq(0, 1, length.out = 1000)

p1 <- dbeta(x, a, b) |> tibble(x = x, y = _) |>

ggplot(aes(x, y)) +

geom_line() +

ylab("Density") +

ggtitle("PDF") +

theme_bw()

p2 <- pbeta(x, a, b) |> tibble(x = x, y = _) |>

ggplot(aes(x,y)) +

geom_line() +

theme_bw() +

ggtitle("CDF") +

ylab(paste0("P(X","\u2264"," x)"))

p1 + p2

Distributions in R

Several common distributions can be utilized in R with the 4 common functions:

| Letter | Functionality |

|---|---|

d |

returns the height of the probability density/mass function |

p |

returns the cumulative density function value |

q |

returns the inverse cumulative density function (percentiles) |

r |

returns a randomly generated number |

Random Number Generator

Random Variables

Random Number Generator

Random Variable Generations

Transformation Methods

Central Limit Theorem

Generating Random Numbers

A number is an outcome from a random experiment.

Random experiment is an experiment where the outcome is not predicted.

The outcomes have a probability of being observed, whether equal or not.

Generating Random Numbers

Generating Random Numbers

Generating Random Numbers

Generating Random Numbers

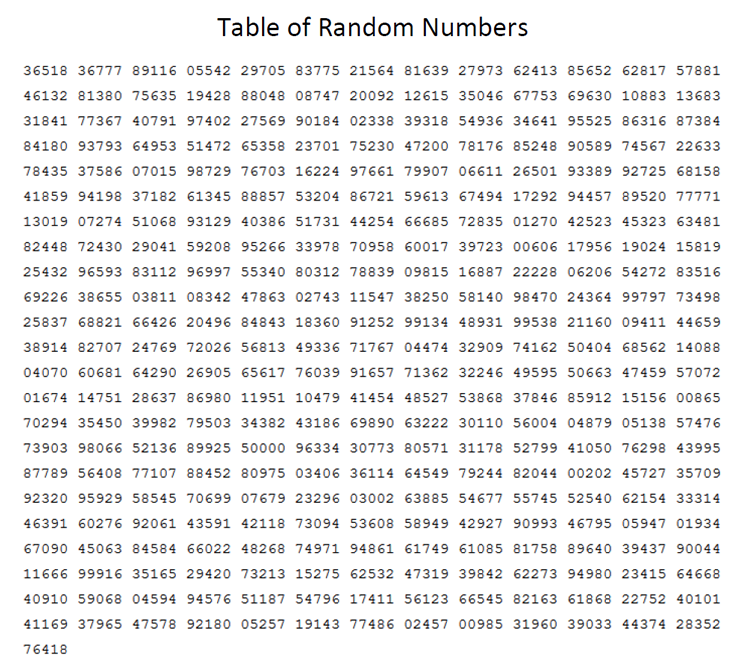

Psuedo Random Numbers

These methods are considered time-consuming when a large number values are necessary.

With the advent of computers, random number can be generated with the use deterministic algorithms, where a mechanism is used to make it random, such as time.

Computer-generated random numbers are considered psuedo random numbers because an algorithm is used to generate them given an initial single value, known as a seed.

Supplying a seed to a random number generator will ensure that the same numbers are produced every time.

Mersenne Twister

The Mersenne Twister is a widely used pseudorandom number generator (PRNG) known for its high quality and efficiency. It was developed by Makoto Matsumoto and Takuji Nishimura in 1997.

The default random number generator in R.

Uniform Distribution R

Random Variable Generations

Random Variables

Random Number Generator

Random Variable Generations

Transformation Methods

Central Limit Theorem

Random Variable Generation

Several distribution, common and uncommon, can be generated using a uniform random variables.

More complex distributions may require the use of common distributions.

Inverse-Transform Method

Inverse-Transformation Algorithm

- Generate a random value \(U\) that follows a \(U(0,1)\)

- Using the CDF (\(F(X)\)) for random variable \(X\), compute:

\[ X = F^{-1}(U) \]

Exponential Distribution

An exponential random variable is characterized by the exponential distribution, used to model waiting times or the time until an event occurs a certain number of times.

The exponential distribution is a gamma random variable with \(\alpha = 1\).

Exponential Distribution

\[ f(x) = \frac{1}{\lambda} \exp\left\{-\frac{x}{\lambda}\right\} \]

\[ F(x) = 1-\exp\left\{-\frac{x}{\lambda}\right\} \]

\[ F^{-1}(x) = -\lambda \log(1-x) \]

Simulating an Exponential RV

\[ X \sim Exp(2) \]

Simulating an Exponential RV

Simulating an Exponential RV

Exponential RV in R

Discrete RV Inverse-Transformations

- Generate a random value \(U\) that follows a \(U(0,1)\)

- Using the CDF (\(F(X)\)), find the smallest integer value \(k\) such that:

\[ U \leq F(k) \] 3. \(X \leftarrow k\)

Poisson Distribution

Poisson Distribution

Code

finder <- function(u){

x <- 0

condition <- TRUE

while (condition) {

uu <- ppois(x, lambda = 6)

condition <- uu <= u

if(condition){

x <- x + 1

}

}

return(x)

}

xx <- sapply(u, finder)

xx |> tibble(x = _) |>

ggplot(aes(x=x, y = ..density..)) +

geom_histogram(bins = 21) +

geom_step(data = tibble(x = xe, y = dpois(xe, lambda = 6)),

mapping = aes(x,y)) +

theme_bw()

Exponential RV in R

Normal Distribution

Obtaining the inverse distribution function of a normal distribution requires the use of numeric algorithms.

Therefore it is computationally inefficient to use the inverse-transformation algorithm to generate normal random variables.

The Box-Muller algorithm was developed to generate 2 standard normal (\(N(0,1)\)) random variables from uniform random variables.

Normal Distribution

\[ y = \int^x_{-\infty} \frac{1}{\sqrt{2\pi}} \exp\left\{-\frac{z^2}{2}\right\}dz \]

Box-Muller Algorithm

- Generate 2 independent random variables from \(U(0,1)\), \(U_1\) and \(U_2\)

- \(X_1 = (-2 \log(U_1))^{1/2}\cos(2\pi U_2)\)

- \(X_2 = (-2 \log(U_1))^{1/2}\sin(2\pi U_2)\)

Both \(X_1\) and \(X_2\) are independent \(N(0,1)\)

Normal Distribution R

Accept-Reject Algorithm

The Accept-Reject algorithm allows you to generate noncommon random variable by simulating from a common random variable.

Algorithm Set Up

Let \(X\) be the random variable, that is difficult to generate, you want to generate with a pdf \(f(x)\).

Let \(Y\) be an easily generated random variable with a pdf \(g(y)\). That follows the same support as \(f(x)\)

Lastly, multiply \(g(y)\) with a constant \(c\) such that \(f(y)\leq cg(y)\).

Algorithm

- Generate \(Y\) with a pdf of \(g(y)\)

- Generate \(U\) from \(U(0, cg(y))\)

- Accept-Reject

- Accept: \(U\leq f(y)\); \(Y \rightarrow X\)

- Reject: \(U>f(y)\); repeat the algorithm

Modified Algorithm

- Generate \(Y\) with a pdf of \(g(y)\)

- Generate \(U\) from \(U(0,1)\)

- Accept-Reject

- Accept: \(U\leq f(y)/(cg(y))\); \(Y \rightarrow X\)

- Reject: \(U>f(y)/(cg(y))\); repeat the algorithm

Gamma Random Variable

Gamma RV

Code

xe <- seq(0, 20, length.out = 1000)

x <- rexp(100000)

x |> tibble(x = _) |>

ggplot(aes(x=x, y = ..density..)) +

geom_histogram(aes(color = "Exponential")) +

geom_line(data = tibble(x = xe,

y = dgamma(x, shape = 2.3, scale = 1.2)),

aes(x,y, color = "Gamma")) +

ylab("Density") +

theme_bw() +

theme(legend.position = "bottom",

legend.title = element_blank())

Gamma RV

Code

xe <- seq(0, 20, length.out = 1000)

xe |> tibble(x = _) |>

ggplot(aes(x=x, y = dgamma(x, shape = 2.3, scale = 1.2))) +

geom_line(aes(color = "Gamma")) +

geom_line(data = tibble(x = xe, y = dexp(xe, 1/3)), aes(x,y, color = "Exponential")) +

ylab("Density") +

theme_bw() +

theme(legend.position = "bottom",

legend.title = element_blank())

Accept-Reject Gamma RV

Code

xe <- seq(0, 20, length.out = 1000)

xe |> tibble(x = _) |>

ggplot(aes(x=x, y = dgamma(x, shape = 2.3, scale = 1.2))) +

geom_line(aes(color = "Gamma")) +

geom_line(data = tibble(x = xe, y = 1.5*dexp(xe, 1/3)), aes(x,y, color = "Exponential")) +

ylab("Density") +

theme_bw() +

theme(legend.position = "bottom",

legend.title = element_blank())

Accept-Reject Gamma RV

Code

xe <- seq(0, 20, length.out = 1000)

xe |> tibble(x = _) |>

ggplot(aes(x=x, y = dgamma(x, shape = 2.3, scale = 1.2))) +

geom_line(aes(color = "Gamma")) +

geom_line(data = tibble(x = xe, y = 3*dexp(xe, 1/3)), aes(x,y, color = "Exponential")) +

ylab("Density") +

theme_bw() +

theme(legend.position = "bottom",

legend.title = element_blank())

Accept-Reject Gamma RV

Gamma RV

Code

x |> tibble(x = _) |>

ggplot(aes(x=x, y = ..density..)) +

geom_histogram(aes(color = "Exponential")) +

geom_line(data = tibble(x = xe,

y = dgamma(x, shape = 2.3, scale = 1.2)),

aes(x,y, color = "Gamma")) +

ylab("Density") +

theme_bw() +

theme(legend.position = "bottom",

legend.title = element_blank())

Gamma Distribution R

Beta RV in R

Bernoulli RV in R

Binomial RV in R

Negative Binomial RV in R

Transformation Methods

Random Variables

Random Number Generator

Random Variable Generations

Transformation Methods

Central Limit Theorem

\(N(0,1)\)

\[ X \sim N(\mu, \sigma^2) \]

\[ Z = \frac{X-\mu}{\sigma} \sim N(0,1) \]

\(N(\mu, \sigma^2)\)

\[ Z \sim N(0,1) \]

\[ X = Z\sigma + \mu \sim N(\mu, \sigma^2) \]

\(\chi^2(1)\)

\[ Z \sim N(0,1) \]

\[ Z^2 \sim \chi^2(1) \]

\(F(m,n)\)

\[ U \sim \chi^2(m) \]

\[ V \sim \chi^2(n) \]

\[ F = \frac{U/m}{V/n} \sim F(m,n) \]

\(t(n)\)

\[ Z \sim N(0,1) \]

\[ U \sim \chi^2(m) \]

\[ T = \frac{Z}{\sqrt{U/m}} \sim t(n) \]

\(Beta(\alpha, \beta)\)

\[ U \sim Gamma(\alpha,\lambda) \]

\[ V \sim Gamma(\beta,\lambda) \]

\[ X = \frac{U}{U+V} \sim Beta(\alpha,\beta) \]

Central Limit Theorem

Random Variables

Random Number Generator

Random Variable Generations

Transformation Methods

Central Limit Theorem

Central Limit Theorem

If random variables \(X_1, X_2, \cdots, X_n\) are independent come from the same distribution (\(iid\)), \(E(X_i) = \mu <\infty\) (finite), \(Var(X_i) = \sigma^2<\infty\) (finite), then

\[ \bar X \sim N(\mu, \sigma^2/n) \]

Normal Distribution

For \(n\) random variables:

\[ X_i \sim N(4, 32) \] By CLT

\[ \bar X \sim N(4, 32 / n) \]

Normal Distribution

Code

norm <- function(x){

rnorm(10000, 4, sqrt(32))

}

X <- sapply(1:1000, norm)

Xbar <- apply(X, 2, mean)

xx <- seq(min(Xbar)-.1, max(Xbar)+.1, length.out = 100)

Xbar |> tibble(x = _) |>

ggplot(aes(x=x, y = ..density..)) +

geom_histogram() +

geom_line(data = tibble(x = xx, y = dnorm(xx, 4, sqrt(32/10000))),

aes(x, y))Normal Distribution

Code

norm <- function(x){

rnorm(10000, 4, sqrt(32))

}

X <- sapply(1:1000, norm)

Xbar <- apply(X, 2, mean)

xx <- seq(min(Xbar)-.1, max(Xbar)+.1, length.out = 100)

Xbar |> tibble(x = _) |>

ggplot(aes(x=x, y = ..density..)) +

geom_histogram() +

geom_line(data = tibble(x = xx, y = dnorm(xx, 4, sqrt(32/10000))),

aes(x, y)) +

theme_bw()

Poisson Distribution

Code

norm <- function(x){

rpois(10000, 8.5)

}

X <- sapply(1:1000, norm)

Xbar <- apply(X, 2, mean)

xx <- seq(min(Xbar)-.1, max(Xbar)+.1, length.out = 100)

Xbar |> tibble(x = _) |>

ggplot(aes(x=x, y = ..density..)) +

geom_histogram() +

geom_line(data = tibble(x = xx, y = dnorm(xx, 8.5, sqrt(8.5/10000))),

aes(x, y))Gamma Distribution

Code

norm <- function(x){

rgamma(10000, shape = 5, scale = 2)

}

X <- sapply(1:1000, norm)

Xbar <- apply(X, 2, mean)

xx <- seq(min(Xbar)-.1, max(Xbar)+.1, length.out = 100)

Xbar |> tibble(x = _) |>

ggplot(aes(x=x, y = ..density..)) +

geom_histogram() +

geom_line(data = tibble(x = xx, y = dnorm(xx, 10, sqrt(20/10000))),

aes(x, y))Cauchy Distribution